Modelling, Mapping, Cleaning, and Normalizing Data

Developed a standard script to remove special characters from data fields and to identify duplicate field names in the header row of the raw data. Browse GitHub to view the code.
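As an illustration only (this is not the script in the repository), a minimal Python sketch of this kind of cleaning might look like the following. The function names `clean_field` and `duplicate_headers`, and the choice to keep only letters, digits, underscores, and spaces, are assumptions made for the example.

```python
import re
from collections import Counter


def clean_field(value: str) -> str:
    """Drop every character that is not a letter, digit, underscore, or space,
    then collapse runs of whitespace and trim the ends."""
    cleaned = re.sub(r"[^A-Za-z0-9_ ]+", "", value)
    return re.sub(r"\s+", " ", cleaned).strip()


def duplicate_headers(header_row: list[str]) -> list[str]:
    """Return cleaned, lower-cased header names that occur more than once,
    in the order they first appear."""
    normalized = [clean_field(h).lower() for h in header_row]
    counts = Counter(normalized)
    duplicates = []
    for name in normalized:
        if counts[name] > 1 and name not in duplicates:
            duplicates.append(name)
    return duplicates


# Hypothetical header row from a raw extract.
header = ["Member ID", "member  id!", "DOB", "Claim#"]
print([clean_field(h) for h in header])
print(duplicate_headers(header))
```

Comparing headers after cleaning and lower-casing means near-identical names such as `Member ID` and `member  id!` are reported as duplicates, which is usually what matters before loading.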

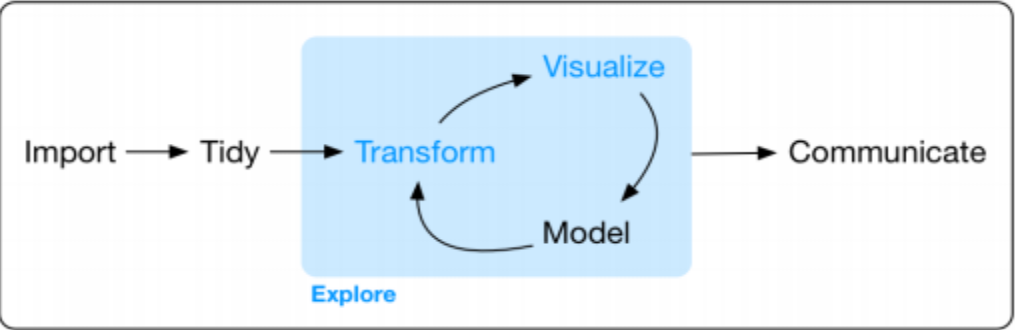

Understand and implement the ETL process for onboarding data, and work with third-party extractors to perform ETL so that the data is accessible to data processors. Perform analysis and profiling of data sources. Build models to logically map, normalize, quality-check, and review client data sets by following the process below:

Develop pseudocode to define the programming logic and build requirements for logically mapping multiple data sources (for example claims, enrollment, CMS, CCLFs, and 837s) in order to onboard multiple data source types such as payer and EMR data.
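One way such mapping logic could be expressed, shown here as a rough sketch rather than the actual implementation, is a per-source lookup table that renames source columns to a shared target schema. The source column names (`MBR_ID`, `SVC_DT`, `MemberNumber`, `EffDate`), the target names, and the `map_record` helper are all hypothetical.

```python
# Hypothetical mapping from each source's column names to a common schema.
FIELD_MAPS = {
    "claims": {"MBR_ID": "member_id", "SVC_DT": "service_date"},
    "enrollment": {"MemberNumber": "member_id", "EffDate": "effective_date"},
}


def map_record(source: str, record: dict) -> dict:
    """Rename the mapped fields of one source record to the common schema.

    Unmapped source fields are dropped; mapped fields missing from the
    record come through as None so gaps stay visible downstream."""
    mapping = FIELD_MAPS[source]
    return {target: record.get(src) for src, target in mapping.items()}


raw_claim = {"MBR_ID": "M1", "SVC_DT": "2023-01-15", "EXTRA": "x"}
print(map_record("claims", raw_claim))
```

Keeping the mapping in data rather than in code means a new source type can be onboarded by adding one dictionary entry.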

Onboard health data into production after the data sets have been vetted, and check the data for consistency, continuity, completeness, validity, and referential integrity.
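Three of these checks (completeness, validity, and referential integrity) can be sketched in a few lines of Python. This is an assumed illustration, not the production checks: the field names `claim_id`, `member_id`, and `service_date`, the ISO date format, and the helper names are all made up for the example.

```python
from datetime import datetime


def check_completeness(rows, required):
    """Return indexes of rows with an empty or missing required field."""
    return [
        i for i, row in enumerate(rows)
        if any(not str(row.get(field) or "").strip() for field in required)
    ]


def check_validity(rows, date_field, fmt="%Y-%m-%d"):
    """Return indexes of rows whose date field does not parse with fmt."""
    bad = []
    for i, row in enumerate(rows):
        try:
            datetime.strptime(str(row.get(date_field, "")), fmt)
        except ValueError:
            bad.append(i)
    return bad


def check_referential_integrity(child_rows, parent_rows, key):
    """Return indexes of child rows whose key has no match in parent rows."""
    parent_keys = {row[key] for row in parent_rows if row.get(key)}
    return [i for i, row in enumerate(child_rows) if row.get(key) not in parent_keys]


# Hypothetical sample data.
enrollment = [{"member_id": "M1"}, {"member_id": "M2"}]
claims = [
    {"claim_id": "C1", "member_id": "M1", "service_date": "2023-01-15"},
    {"claim_id": "C2", "member_id": "M9", "service_date": "2023-02-30"},
    {"claim_id": "", "member_id": "M2", "service_date": "2023-03-01"},
]
print(check_completeness(claims, ["claim_id", "member_id"]))
print(check_validity(claims, "service_date"))
print(check_referential_integrity(claims, enrollment, "member_id"))
```

Each function returns row indexes instead of raising, so all failures in a data set can be collected and reported together before anything is loaded.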

Communicate findings from querying health data in production tables to provide insights, investigate issues, and generate reports.